Data and StudySpecification

data(lsoSynth)The data for this example were randomly simulated using the

synthpop package in R based on data originally

collected by Lindo,

Sanders, and Oreopoulos (2010; hereafter LSO). (The original real

data can be found here;

code to simulate the fake data can be found here.)

At a major public university, students with a cumulative grade point average (GPA) below a pre-specified cutoff are placed on academic probation (AP). Students on AP are given extra support, and threatened with suspension if their GPAs do not improve. See LSO for details. Along with LSO, we will consider only students at the end of their first year at the university. The university consisted of three campuses, and they AP cutoff varied by campus. To simplify matters, we centered each student’s first year GPA on their campus’s cutoff, creating a new variable , where is student ’s first-year GPA, and is the AP cutoff for student ’s campus. A student is placed on AP if and avoids AP if .

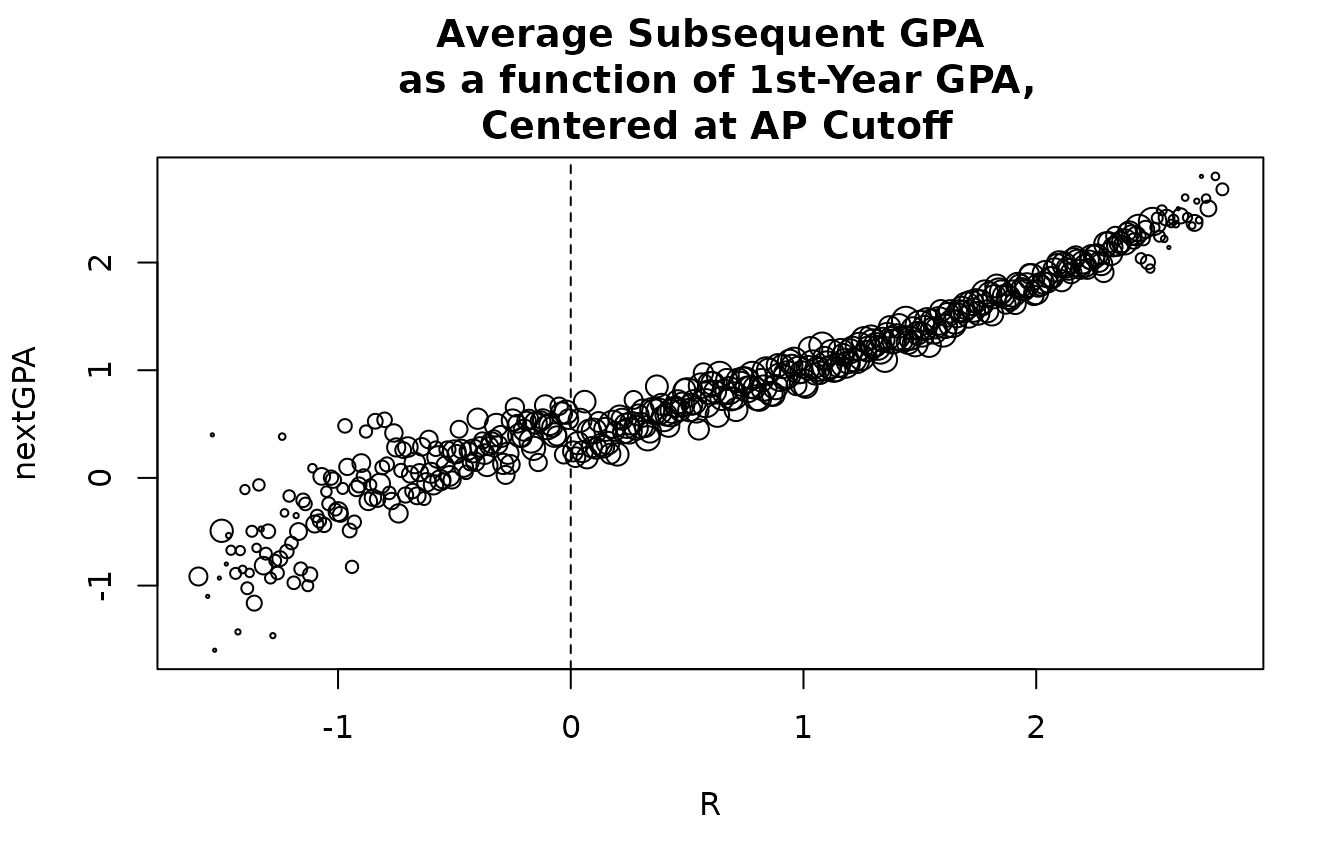

We will attempt to estimate the average effect of AP placement on

nextGPA, students’ subsequent GPA (either over the summer

or in the following academic semester). This figure plots each the

average nextGPA for students with distinct R

values (i.e. centered first-year GPA). The cutoff for AP, 0, is denoted

with a dotted line. The the sizes of the points are proportional to the

natural log of the numbers of students with each unique value of

R.

figDat <- aggregate(lsoSynth[,c('nextGPA','lhsgrade_pct')],by=list(R=lsoSynth$R),

FUN=mean,na.rm=TRUE)

figDat$n <- as.vector(table(lsoSynth$R))

with(figDat,

plot(R,nextGPA,cex=log(n+1)/3,main='Average Subsequent GPA\n as a function of 1st-Year GPA,\n Centered at AP Cutoff'))

abline(v=0,lty=2)

Background

Regression Discontinuity Design

What characterizes discontinuity (RD) design, including the LSO study, is that treatment is assigned as a function of a numeric “running variable” , along with a prespecified cutoff value , so that treatment is assigned to subjects for whom , or for whom . If denote’s ’s treatment assignment, we write or , where is the indicator function–equal to 1 when is true and 0 otherwise. In the LSO study, indexes students, centered first-year GPA $R_i $ is the running variable, and the treatment under study is AP placement. Then $ Z_i={R_i<0} $. (In “fuzzy” RD design, ’s value relative to doesn’t completely determine treatment, but merely affects the probability of treatment, so subjects with, say, are more likely to be treated than those with ; in fuzzy RD design, is typically modeled as an instrument for treatment receipt.)

RD design hold a privileged place in causal inference, because unlike most other observational designs, the mechanism for treatment assignment is known. That is, is the only confounder. On the other hand, since is completely determined by , there are no subjects with the same values for but different values for , so common observational study techniques, such as matching on , are impossible. Instead, it is necessary to adjust for with modeling–typically regression.

Analyzing RD Design with ANCOVA

Traditionally, the typical way to analyze data from RD design is with

ANCOVA, by fitting a regression model such as

where

is the outcome of interest measured for subject

(in our example nextGPA), and

is a random regression error. Then, the regression coefficient for

,

,

is taken as an estimate of the treatment effect. Common methodological

updates to the ANCOVA approach include an interaction term between

and

,

and the substitution semi-parametric regression, such as local linear or

polynomial models, for linear ordinary least squares.

Under suitable conditions, the ANCOVA model is said to estimate a “Local Average Treatment Effect” (LATE). If and are the potential outcomes for , then the LATE is defined as or equivalently where denotes expectation–that is, the LATE is the limit of average treatment effects for subjects with in ever-shrinking regions around .

Among other considerations, the LATE target suggests that data analysts restrict their attention to subjects with falling within a bandwidth of , e.g. only fitting the model to subjects with within a “window of analysis” . A number of methods have been proposed to select , including cross validation, non-parametric modeling of the second derivative of , and specification tests.

The propertee Approach to RD Design

The propertee approach to RD design breaks the data analysis into three steps:

- Conduct design tests and choose a bandwidth , along with, possibly, other data exclusions, resulting in an analysis sample .

- Fit a covariance model , modeling as a function of running variable and (optionally) other covariates . Fitting a covariance model to only the control subjects, as in other design, would entail extrapolation of a model fit to subjects with to subjects with , or vice-versa, which is undesirable. Instead, we fit the covariance model to the full analysis sample , including both treated and untreated subjects. However, since we are interested in modeling , and not or , so rather than fitting , we fit an extended model including a term for treatment assignment. The estimate for is, in essence, a provisional estimate of the treatment effect.

- Let , using only the model for —not including the term for —along with estimated in step 2. Then estimate the average treatment effect for subjects in using the difference in means estimator:

The estimator will be consistent if the model , with as the probability limit for , successfully removes all confounding due to , i.e. for .

Analyzing an RD design in propertee

Determining the window of analysis

There is an extensive literature on determining the appropriate window of analysis for an RD design See, for instance, Imbens and Kalyanaraman (2012) and Sales and Hansen (2020). This stage of the analysis is beyond the scope of this vignette, and does not require the propertee package. For the purpose of this example, we will focus our analysis on .

Initializing the RD Design Object

In general, propertee specification objects require

users to specify a unit of assignment variable, that corresponds to the

level of treatment assignment–in other words, which units were assigned

between conditions. This is necessary both for combining results across

different models or datasets, and for estimating correct

specification-based standard errors. In lsoSynth, there are

no clusters, and individual students are assigned to academic probation

on an individual basis. The dataset does not include an ID variable, but

any variable that takes a unique value for each row will do. We will use

row-names.

lsoSynth$id <- rownames(lsoSynth)Defining an RD design requires, at minimum, identifying the running variable(s) , as well as how determines treatment assignment. In our example,

Modeling as a function of

We will consider two potential models of as a function of . First, the standard OLS model:

##this works, but it's annoying to enter in the subset expression a 2nd time:

g1 <- lm(nextGPA ~ R + Z, data = lsoSynth, subset = abs(R) <= 0.5)The second is a bounded-influence polynomial model of the type recommended in Sales & Hansen (2020):

g2 <-

if(requireNamespace("robustbase", quietly = TRUE)){

robustbase::lmrob(nextGPA~poly(R,5)+Z,data=lsoSynthW)

} else g1[Put something here evaluating them?]

Estimating Effects

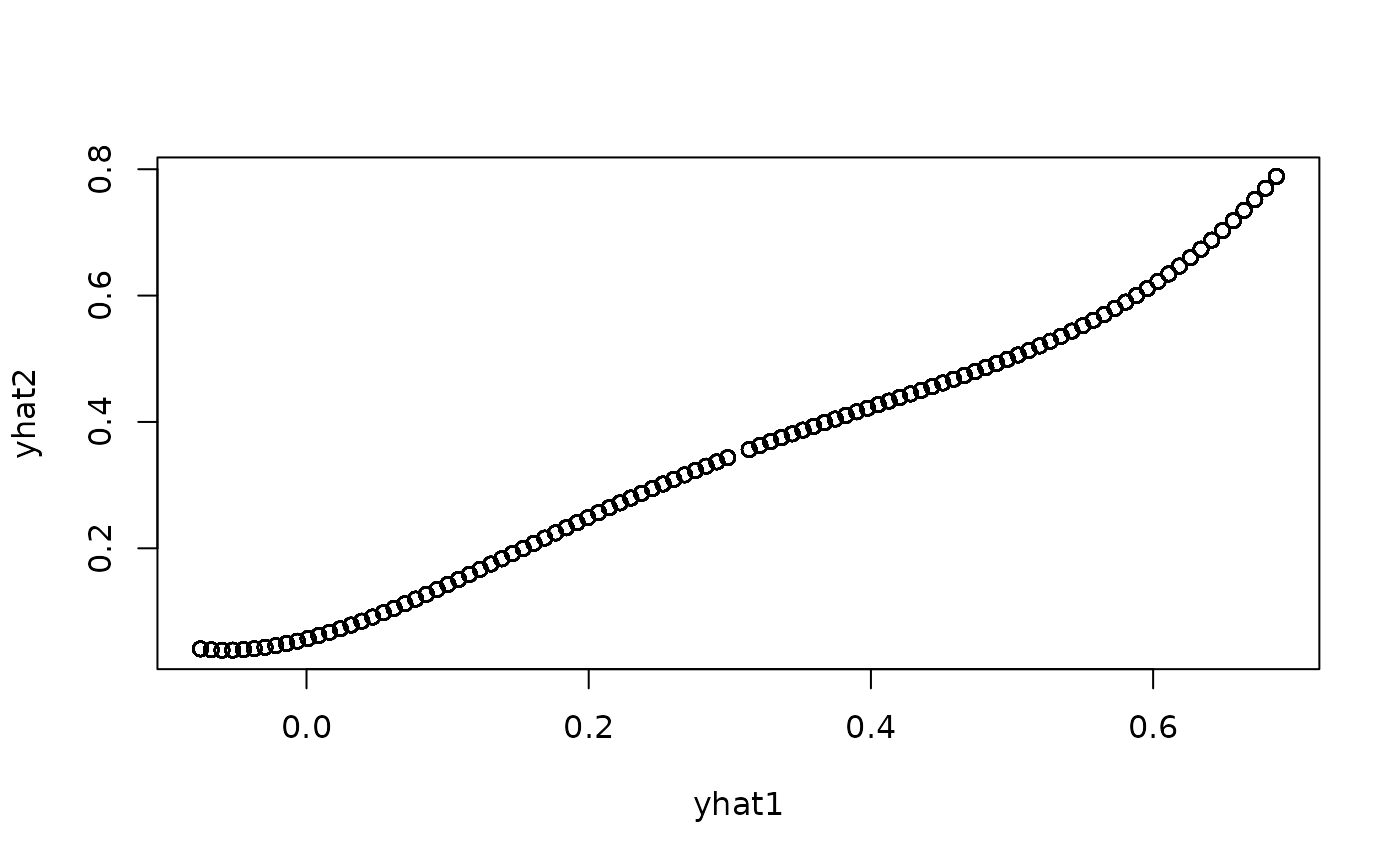

yhat1 <- predict(g1,data.frame(R=forcings(spec)[[1]],Z=FALSE))

yhat2 <- predict(g2,data.frame(R=forcings(spec)[[1]],Z=FALSE))

plot(yhat1,yhat2)